Modifying EKS Kubernetes Pod Application Behavior with AWS AppConfig Feature Flags

Posted April 5, 2023 by Trevor Roberts Jr ‐ 9 min read

The AWS AppConfig team recently released a container image for an AppConfig Agent that can be used as a sidecar in your Kubernetes pod or your ECS task to simplify feature management for cloud native applications on AWS. I decided to take the agent for a spin to see if it was as simple to integrate with containers as it is to integrate AppConfig with Lambda functions...

Disclaimers

If you will be following along with the configuration steps below, make sure you are using a non-production EKS cluster that you are allowed to make changes to! My EKS cluster was deployed using a tool called EKSCTL, and my workflow depends on using this tool for my cluster configurations. If there is interest in seeing how to configure a cluster using a popular IaC alternative like Terraform, let me know on Twitter, and I'll post a follow-up article accordingly.

Introduction

In a previous blog post, I showed how simple it was to integrate AWS AppConfig with your serverless workload. This prompted the question: "Can we get the same benefits with containerized workloads?"

Fortunately, the AppConfig team recently published a container image that can be used as a sidecar for your Kubernetes pods and your ECS tasks.

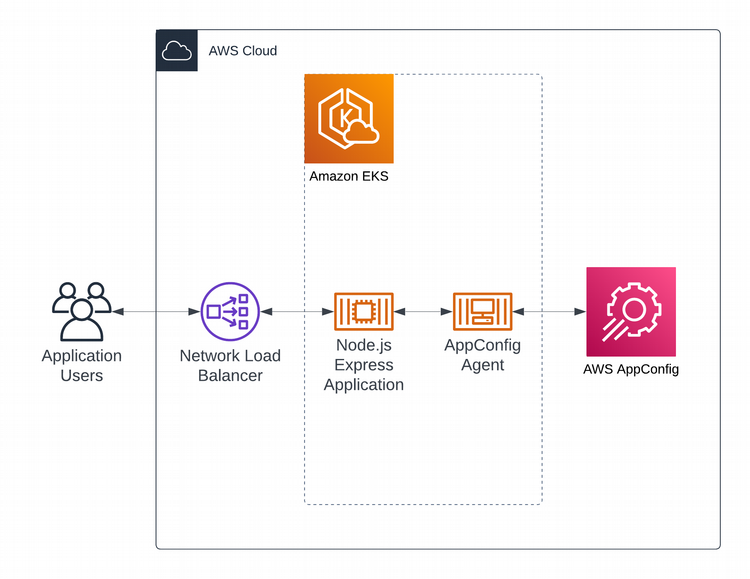

In this blog post, I show how to prepare your EKS environment to use the AppConfig Agent. I deploy a Kubernetes pod that contains a simple Node.js Express web server application that queries the AppConfig Agent sidecar container. The following diagram shows the component interactions that occur when users access the application.

At a high level, these are the steps I followed to enable my containerized demo application to use AWS AppConfig feature flags:

- Deployed an EKS cluster

- Configured the EKS cluster to support IAM Roles for Service Accounts (IRSA)

- Created a Service Account for the Kubernetes pod to use to access AppConfig

- Deployed a Kubernetes pod consisting of two containers: my demo application and the AppConfig Agent sidecar

Now, let's dive into the details!

Prepare your EKS Cluster

If you do not have an EKS cluster already running in your AWS account, you can see an example cluster deployment automation script for EKSCTL in my GitHub Repo.

| WARNING: Make sure to delete the cluster when you are done to avoid unexpected charges! |
|---|

First, your Kubernetes pod will need to have permission to speak with the AppConfig service. There are two ways to accomplish this:

- Assign IAM permissions to the Kubernetes node (Not a recommended practice)

- Use IAM Roles for Service Accounts

Why is IRSA recommended over assigning IAM permissions to the Kubernetes node? With IRSA, you can limit resource access to the pod that needs to access that resource. If you assign IAM permissions to the entire Kubernetes node, any pod on the node can access the resource. This may seem trivial for feature flag data. However, if your pod needs to access sensitive data stored in a DynamoDB table, in an S3 bucket, etc., it is important to control which pod specifically can access those resources.

Enabling IRSA

I used an open source tool called EKSCTL to deploy my EKS cluster. So, I'll be using it to configure IRSA in my EKS cluster.

In the sample commands that follow, my cluster name is appconfig-eks. If you are following along in this walkthrough, be sure to update the --cluster= flag to use your cluster's name.

This command configures an IAM OIDC provider and associates it with your EKS cluster's OIDC issuer URL to enable IRSA.

eksctl utils associate-iam-oidc-provider --cluster=appconfig-eks --approve

This command creates an IAM role with the specified policy attached, along with a new Kubernetes service account that is linked to that role. My pod will use this service account to access AppConfig. Note that I am using the AppConfigAllAccess policy since this is a test environment. However, I recommend you create your own read-only IAM policy for your specific feature flag(s) when planning for production.

eksctl create iamserviceaccount --cluster=appconfig-eks --name=appconfig --namespace=default --attach-policy-arn=arn:aws:iam::<YourAccountNumber>:policy/AppConfigAllAccess --approve

After completing these steps, your cluster is now ready to deploy pods that can use IRSA to securely access AWS resources.
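For the production recommendation above, here is a minimal sketch of what a read-only policy document could look like, written as a small Node.js helper so it matches the rest of the code in this post. The helper name and all of the IDs are hypothetical placeholders. The two actions are the AppConfig data-plane calls used to retrieve configuration data; the resource ARN layout is an assumption that should be checked against the IAM documentation for AppConfig before use.

```javascript
// Hypothetical helper: builds a least-privilege policy document for reading
// one configuration profile. None of these IDs come from the article.
function buildReadOnlyAppConfigPolicy({
  region,
  accountId,
  applicationId,
  environmentId,
  configurationProfileId,
}) {
  // Assumed ARN layout for a configuration scoped to one application/environment.
  const resource =
    `arn:aws:appconfig:${region}:${accountId}:application/${applicationId}` +
    `/environment/${environmentId}/configuration/${configurationProfileId}`;

  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        // Data-plane actions for retrieving configuration data.
        Action: [
          "appconfig:StartConfigurationSession",
          "appconfig:GetLatestConfiguration",
        ],
        Resource: resource,
      },
    ],
  };
}

// Example with placeholder values; print the JSON to save as a policy file.
const policy = buildReadOnlyAppConfigPolicy({
  region: "us-east-2",
  accountId: "111122223333",
  applicationId: "abc1234",
  environmentId: "def5678",
  configurationProfileId: "ghi9012",
});
console.log(JSON.stringify(policy, null, 2));
```

The printed JSON could be saved as a customer managed policy and passed to the --attach-policy-arn flag in place of the broader policy used in this walkthrough.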

My Application

I modified the sample serverless application from my previous AppConfig article to run as a Node.js Express application. The application now queries the AppConfig Agent for the feature flag every time a user makes a request. If you would like to see what the application looks like, I included screenshots towards the end of this article. The application source code can be found on GitHub.

I will discuss important portions of the code below:

// server.js (excerpt: assumes the Express app and the request library are already set up)

// Define route for index page
app.get("/", function (req, res) {
  request(
    // AppConfig Agent API call
    "http://localhost:2772/applications/blogAppConfigGo/environments/prod/configurations/whichSide?flag=allegiance",
    function (error, response, body) {
      if (!error && response.statusCode === 200) {
        // Parse the flag data returned by the agent and pass it to the template
        const data = JSON.parse(body);
        res.render("index", { data: data });
      } else {
        // Handle error
        res.render("error");
      }
    }
  );
});

In the code above, I use the request library to make the API call to the AppConfig agent running in the sidecar container. The URL for the API call consists of the following values you need to retrieve from AppConfig:

- Application

- Environment

- Configuration

- Flag

You can get these values from the AppConfig console or CLI.
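To make the URL structure explicit, here is a small sketch that assembles the agent URL from those four values. The helper name buildAgentUrl is hypothetical and not part of the demo application; the example values are the ones from the server.js excerpt above.

```javascript
// Hypothetical helper: assembles the AppConfig Agent URL from its parts.
function buildAgentUrl({ application, environment, configuration, flag, port = 2772 }) {
  // Encode each path segment in case a name contains special characters.
  const path = [
    "applications", application,
    "environments", environment,
    "configurations", configuration,
  ]
    .map((segment) => encodeURIComponent(segment))
    .join("/");

  const url = new URL(`http://localhost:${port}/${path}`);
  // The flag query parameter is optional; it narrows the response to one flag.
  if (flag) {
    url.searchParams.set("flag", flag);
  }
  return url.toString();
}

console.log(
  buildAgentUrl({
    application: "blogAppConfigGo",
    environment: "prod",
    configuration: "whichSide",
    flag: "allegiance",
  })
);
```

Building the URL from named parts makes a mistyped application, environment, or configuration name easier to spot than it would be inside one long string.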

Since the containers in a pod share the same network namespace, I can make a call out to localhost on the port that the AppConfig agent is listening on: 2772 by default (port is configurable). The agent runs a simple web server that retrieves the requested feature flag on the initial request and makes it available over HTTP. After that initial API call, the feature flag data is cached on the agent. The feature flag data is refreshed every 45 seconds by default (interval is configurable). The JSON data is then included in the render call using the index.ejs template.
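The request library used in server.js has since been deprecated by its maintainers. As an alternative, here is a minimal sketch of the same call using the fetch API that is built into Node.js 18 and later. The function name getFlag and the injectable fetchImpl parameter are assumptions for illustration; they are not part of the demo application.

```javascript
// Fetches flag data from the AppConfig Agent and returns the parsed JSON.
// fetchImpl is injectable so the function can be exercised without a running
// agent; by default it uses the global fetch available in Node.js 18+.
async function getFlag(agentUrl, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(agentUrl);
  if (!response.ok) {
    // A non-2xx status can mean a mistyped name or missing IAM permissions.
    throw new Error(`AppConfig Agent returned HTTP ${response.status}`);
  }
  return response.json();
}

// Demo with a stubbed response, so this runs without the sidecar present.
const stubFetch = async () => ({
  ok: true,
  status: 200,
  json: async () => ({ choice: "paladin", enabled: true }),
});

getFlag(
  "http://localhost:2772/applications/blogAppConfigGo/environments/prod/configurations/whichSide?flag=allegiance",
  stubFetch
).then((data) => console.log(data));
```

Inside an Express route, the handler would be declared async, await getFlag(url), and render the error view from a catch block.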

Here is an example of the JSON data:

{"choice":"paladin","enabled":true}

choice is the attribute that holds my feature flag's value. enabled indicates whether the feature flag is enabled in AppConfig.
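The template that follows branches only on choice. If you also want to respect the enabled field, one option is to resolve the value before rendering. This is a sketch under assumptions: the resolveChoice helper is hypothetical, and falling back to "paladin" when the flag is disabled is an illustrative decision, not behavior taken from the demo application.

```javascript
// Hypothetical helper: returns the flag's choice only when the flag is enabled,
// otherwise a fallback value (assumed here to be the default "paladin").
function resolveChoice(flagData, fallback = "paladin") {
  if (flagData && flagData.enabled === true && typeof flagData.choice === "string") {
    return flagData.choice;
  }
  return fallback;
}

// Using the example JSON from above.
const example = JSON.parse('{"choice":"paladin","enabled":true}');
console.log(resolveChoice(example));

// A disabled flag falls back to the default.
console.log(resolveChoice({ choice: "darkknight", enabled: false }));
```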

<% if (data.choice == "paladin") { %>
  <section>
    ...
  </section>
<% } else { %>
  <section>
    ...
  </section>
<% } %>

EJS is a templating system that allows you to embed server-side JavaScript in HTML templates, so the HTML returned to the web browser can vary based on dynamically generated values. In this case, choice, the attribute that holds the value of the AppConfig feature flag I created, is accessed as a key in the data object parsed from the API response.

After building my container image that contains my demo application and pushing it to ECR, I apply my Kubernetes Deployment manifest:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: appconfig-blog
  labels:
    app: appconfig-blog
spec:
  replicas: 1
  selector:
    matchLabels:
      app: appconfig-blog
  template:
    metadata:
      labels:
        app: appconfig-blog
    spec:
      serviceAccountName: appconfig
      containers:
        - name: my-app
          image: <YourAccountNumber>.dkr.ecr.us-east-2.amazonaws.com/appconfig-blog-app:latest
          imagePullPolicy: Always
        - name: appconfig-agent
          image: public.ecr.aws/aws-appconfig/aws-appconfig-agent:2.x
          ports:
            - name: http
              containerPort: 2772
              protocol: TCP
          env:
            # The agent reads its AWS Region from SERVICE_REGION
            - name: SERVICE_REGION
              value: us-east-2
          imagePullPolicy: Always

Notice that I set serviceAccountName to the service account I created earlier to ensure that my pod has sufficient permissions to access AppConfig. I also declare the AppConfig agent's port number, 2772, which is the port my demo application calls on localhost.

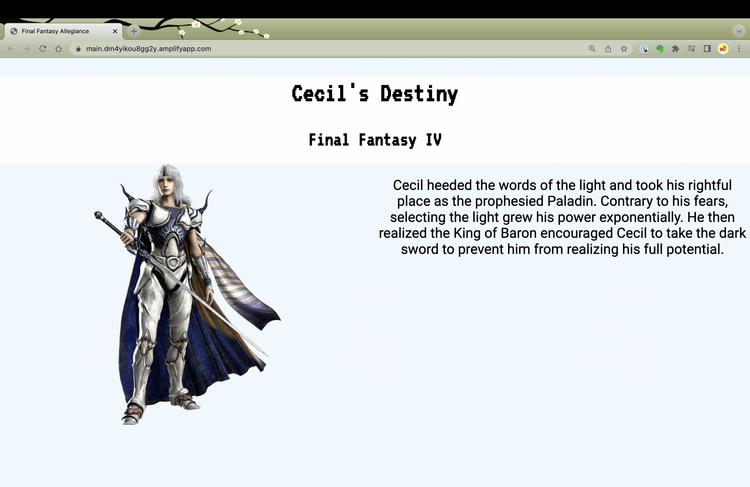

When I create a load balancer to access my application, I can see what the application looks like with the default choice of paladin:

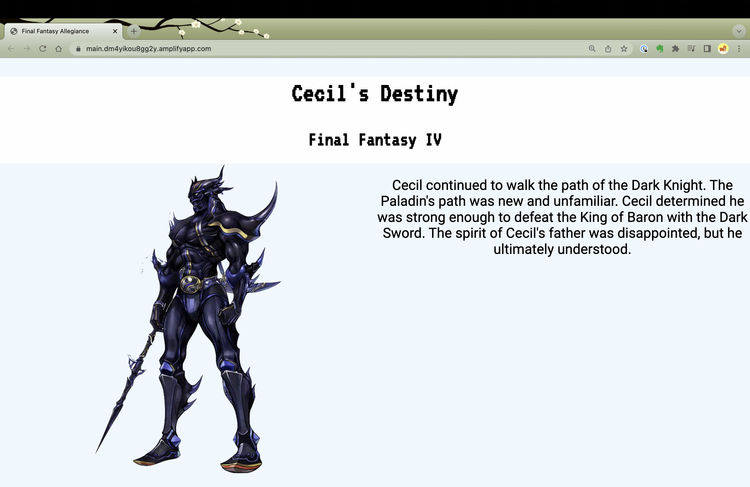

If I update my feature flag to the choice value of darkknight, the content associated with that feature flag value is displayed after the next AppConfig agent polling interval.

Items to Be Aware Of

- The AppConfig Agent container image, as of April 4, 2023, only runs on Linux x86 servers. If you are interested in using the containerized AppConfig agent on Linux ARM servers or on Windows servers, be sure to give the service team that feedback via your AWS account team. This leads to the next item...

- If you are writing software on Mac computers with Apple silicon, you will need to build your application image to run on Linux x86 servers. For example:

  docker buildx build --platform=linux/amd64 -t appconfig-blog-app .

- If you mistype your feature flag name, the agent will poll every interval for the incorrect name until the AppConfig Agent container is restarted. Aside from your feature flag not being accessible, error messages will enter your CloudWatch Logs for each incorrect poll. Make sure to check your AppConfig URL values carefully!

- Make sure you set your IAM permissions appropriately. Otherwise, the AppConfig Agent container will fail to start when you deploy your pod.

Wrapping Things Up...

In this blog post, we discussed how to use the AWS AppConfig Agent to manage features in your containerized workloads running on Kubernetes and on ECS. We covered how to prepare your EKS cluster to support IAM roles for your Kubernetes pods to securely access AWS services. Finally, we learned how a containerized application can use the AppConfig agent to retrieve the required feature flags to dynamically modify its behavior.

If you found this article useful, let me know on Twitter!